Full Page Cache (FPC): Scaling Magento Part 1

Introduction

The most important, and most often ignored, factor in scaling is the quality of the code: well-written code scales better. The next most important factor is probably caching. There are many types of cache that developers, managers and store owners need to understand. Store owners often see Full Page Cache (FPC) as a magic solution to speed issues. Understanding the benefits and compromises of each caching mechanism is essential to understanding scaling.

FPC Options

Magento Enterprise 1.x and Magento 2.x (both Open Source and Commerce) have a built-in FPC module.

There are many FPC plugins available for Magento Community 1.x. Some hosting providers will help set up a Varnish-based FPC with appropriate hole punching.

Magento 2 has two mechanisms for FPC – a PHP-based one (called FPC) and Varnish. Varnish is the preferred option for production because of its architecture and speed of response.

The discussion below applies to all these mechanisms as well as to Magento 1.x and Magento 2.

What is Full Page Cache (FPC)?

FPC is a cache of a full HTML page, except for variable content such as login status, items in the cart, the stock status of a product or sometimes even its price. On a hit, i.e. when the requested page is in the cache, FPC returns the page very quickly. On a miss, i.e. when the page is not in the cache, the content has to be regenerated, which is much slower. FPC may store the cache in files, but for maximum benefit it is more likely to be stored in memory.

FPC affects resource utilization – memory and CPU. As with all caches, we trade memory for CPU time.

Traditional FPC is part of Magento and stores the page in the Magento cache. Varnish is not part of Magento: it is a separate process that stores the page HTML after Magento has generated it.

What FPC is not!

We made it! Last talk by our @MageTitans brother about Magento 2 Fpc @tonegolf71 pic.twitter.com/3qEJ6bxgZX

— MageTitansIT (@MageTitansIT) June 9, 2017

Let us understand FPC better

- Memory needed to store the entire site in FPC

Let us say each page is 100KB and you have 10000 pages to cache. That would take about 1GB of RAM. The problem is that as the number of pages or the page size rises, so does the RAM requirement. If you now had 20000 pages (for example because each layered navigation option produces its own page), you would need 2GB, and if each page was 120KB those 20000 pages would need about 2.4GB. Pages are not just products – they are category pages as well. If layered navigation is added, the pages multiply fast, as each combination is unique and needs to be stored independently. If you start exceeding the RAM available, you need to decide what to do when you hit the memory limit.

- Cache warming

Cache warming is the process of automatically adding pages to the cache before a real visitor requests them. When a cache is cleared, you may need to warm it to make FPC effective early. Cache warming uses a crawler to artificially visit pages of a site. A typical crawler will recursively crawl the site starting from the home page. This sounds logical, but here are some things to think through:

- If possible, find the pages most likely to be needed in the cache and warm the cache with only those pages. This will give the maximum benefit.

- If you cannot fit all the pages in memory, using a crawler to warm the cache becomes a problem – the crawl will recycle pages out of memory at random, not based on how popular the pages are with end users.

- When the cache is being warmed your resource requirement in terms of CPU will rise as both the crawler and real traffic are being served.

- If possible, crawl the site in parallel – the earlier the pages get cached, the more likely it is that the page a visitor requests is already in the cache (scoring a hit).
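The points above can be sketched as a small popularity-based warmer. This is only an illustration under stated assumptions: it assumes the web server writes access logs in the common/combined format, and the names `most_popular_paths`, `warm_cache` and `fetch` are hypothetical helpers, not part of Magento or any FPC plugin.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from urllib.error import HTTPError
from urllib.request import urlopen


def most_popular_paths(log_lines, limit):
    """Return the `limit` most requested GET paths from access-log lines.

    Assumes the common/combined log format, where the request is the
    first quoted field, e.g. "GET /some/path HTTP/1.1".
    """
    counts = Counter()
    for line in log_lines:
        parts = line.split('"')
        if len(parts) < 2:
            continue  # not a log line we understand
        request = parts[1].split()
        if len(request) >= 2 and request[0] == "GET":
            counts[request[1]] += 1
    return [path for path, _ in counts.most_common(limit)]


def fetch(url):
    """Request one page so the cache in front of the site stores it."""
    try:
        with urlopen(url, timeout=30) as response:
            response.read()
            return response.status
    except HTTPError as err:
        return err.code  # record 4xx/5xx instead of aborting the run


def warm_cache(base_url, paths, fetch_page=fetch, workers=4):
    """Fetch the given paths in parallel and return {url: status}.

    Keep `workers` small: the warmer competes with real traffic for CPU.
    """
    urls = [base_url.rstrip("/") + path for path in paths]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        statuses = list(pool.map(fetch_page, urls))
    return dict(zip(urls, statuses))
```

A run might read yesterday's access log, take the top few hundred paths with `most_popular_paths`, and pass them to `warm_cache` with the shop's base URL. Limiting the list to what fits in memory avoids the random recycling described above.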

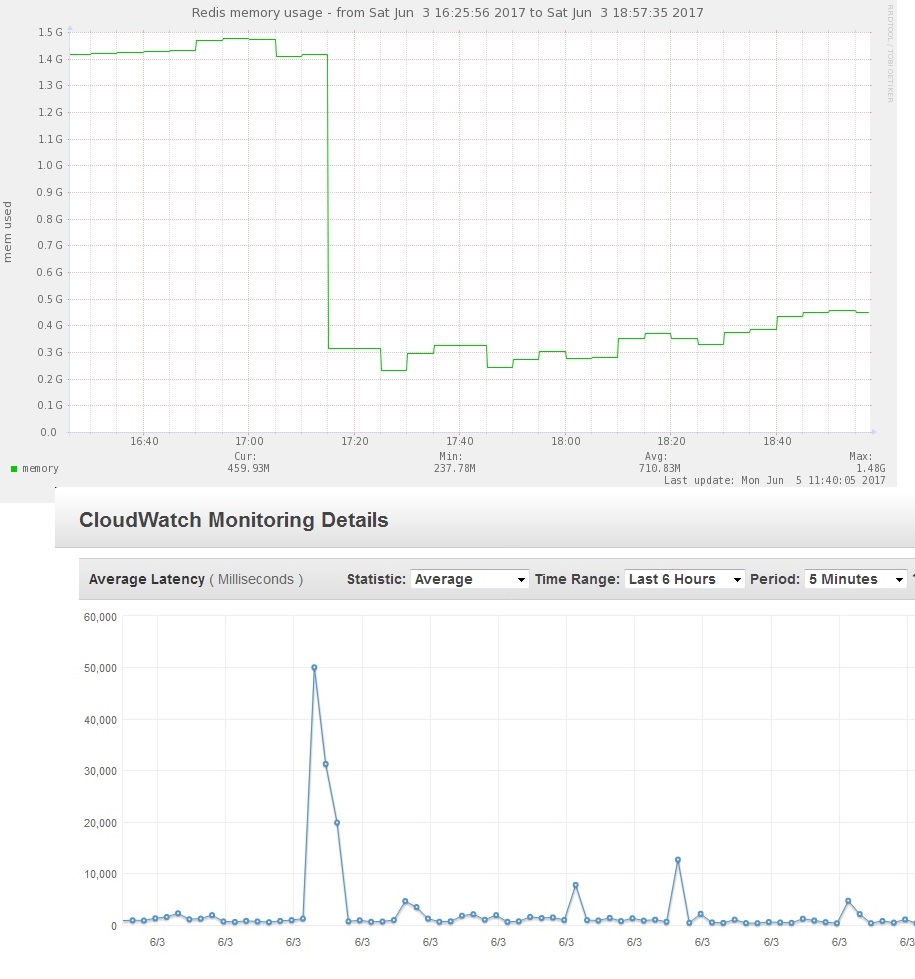

The figure above shows the poor response times immediately after an FPC that had grown to 1.5GB was cleared completely. The top image is the Redis usage graph from Munin, and the one below is AWS CloudWatch latency (time to serve a page) averaged per minute. The latency came down as AWS Auto Scaling added more instances, which costs money.
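To judge in advance whether a cache of that size will fit in RAM, the memory arithmetic from the first item can be written down as a tiny calculation. This is a rough sketch: `fpc_memory_gb` is a hypothetical helper, it uses decimal units (1GB = 1,000,000KB), and it ignores per-entry overhead and any compression the cache backend may apply.

```python
def fpc_memory_gb(pages, avg_page_kb):
    """Estimate the RAM needed to hold `pages` cached pages, in decimal GB.

    Assumption: every page costs exactly `avg_page_kb`; real backends add
    key and tag overhead and may compress the HTML.
    """
    return pages * avg_page_kb / 1_000_000  # KB -> GB


# Page counts and sizes taken from the example in the text.
for pages, size_kb in [(10_000, 100), (20_000, 100), (20_000, 120)]:
    print(f"{pages} pages x {size_kb}KB = {fpc_memory_gb(pages, size_kb):.1f}GB")
```

With layered navigation, the page count to plug in is the number of distinct filter combinations that actually get visited, which can be far larger than the number of categories.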

- Invalidating the cache

Magento automatically invalidates FPC (internal or Varnish) by tagging or hashing the content with keys that refer to the type of content. For example, it may generate a tag / hash CATEGORY_123 if the page depends on category 123. When category 123 changes, Magento sends out an invalidation message that says “all pages that have tag / hash CATEGORY_123 should be invalid”. Magento has an elaborate tag convention.

- FPC and robotic crawlers (BOTS)

Even if you do not use a crawler for warming, robotic crawlers on the internet (such as Google’s indexer, Googlebot) will start filling the FPC with pages they happen to crawl. We advise that a site with FPC should have a robots.txt and a front-end processor (nginx, WAF) restricting bots.

- CPU and time needed to re-generate a page

An FPC can be invalidated fully (cleared) because a (p)html or CSS file changed, or partially because of a data change such as a product update. A miss from FPC results in the page being regenerated. The CPU requirement for a miss is much higher than for a hit. If a crawler is used to warm the cache, or if traffic is high, the CPU requirement can be quite high as the FPC fills up, and the visitor experience is poor during this period. With autoscaling, this performance degradation can be contained to some extent, as additional instances are launched to handle the high CPU requirement.

- Discipline when using FPC – know when invalidation happens

It is important to be disciplined about code updates, as they have the worst effect on user experience.

- Code updates should be done at low traffic times.

- Category changes should be carefully planned at low traffic times.

- Magento indexing should be set to manual (M1) or on schedule (M2) with a cron running the indexer.
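To make the cost of an invalidation concrete, the tag-based mechanism described under "Invalidating the cache" can be sketched as a toy in-memory cache. This is not Magento's implementation: `TaggedPageCache` is a hypothetical class, and the tag names follow the illustrative CATEGORY_123 style used above (PRODUCT_42 is made up for the example).

```python
from collections import defaultdict


class TaggedPageCache:
    """Toy page cache where each page carries tags naming what it depends on."""

    def __init__(self):
        self._pages = {}                       # url -> cached HTML
        self._tags_by_url = {}                 # url -> set of tags
        self._urls_by_tag = defaultdict(set)   # tag -> set of urls

    def save(self, url, html, tags):
        self.remove(url)  # drop any stale entry and its tag links first
        self._pages[url] = html
        self._tags_by_url[url] = set(tags)
        for tag in tags:
            self._urls_by_tag[tag].add(url)

    def load(self, url):
        """Return the cached HTML, or None on a miss."""
        return self._pages.get(url)

    def remove(self, url):
        self._pages.pop(url, None)
        for tag in self._tags_by_url.pop(url, set()):
            self._urls_by_tag[tag].discard(url)

    def invalidate_tag(self, tag):
        """Remove every page that depends on `tag`; return how many were removed."""
        urls = list(self._urls_by_tag.get(tag, ()))
        for url in urls:
            self.remove(url)
        return len(urls)


cache = TaggedPageCache()
cache.save("/shoes.html", "<html>shoes</html>", ["CATEGORY_123"])
cache.save("/shoes/red-sneaker.html", "<html>sneaker</html>", ["CATEGORY_123", "PRODUCT_42"])
cache.save("/hats.html", "<html>hats</html>", ["CATEGORY_456"])

removed = cache.invalidate_tag("CATEGORY_123")  # category 123 was edited
print(removed, cache.load("/shoes.html"), cache.load("/hats.html"))
```

One category edit removes every page carrying that tag, and each of those pages becomes a miss on its next request. This is why category changes are best planned for low traffic times.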

Our recommendation for FPC

- Do not use a random crawler to warm the FPC. Use a crawler driven by page popularity to warm the cache if necessary.

- Avoid using a crawler during high traffic – the crawler will compete for system resources with live traffic

- If possible update code during low traffic times as it causes FPC to invalidate

- If your site is horizontally scaled, pre-launch instances to your load balancer before invalidating FPC, either explicitly or indirectly, so the latency of starting an instance does not further worsen the user experience

Magento 1.x FPC Plugins

- Free Lesti FPC : https://github.com/GordonLesti/Lesti_Fpc. Use this guide to install

- Magento connect search results for FPC

Should FPC be a part of scaling strategy?

FPC is concerned with speed. Scale is concerned with the process that helps the site add resources when needed. FPC helps in scalability by reducing the use of resources per hit to the website under certain conditions. It changes the dynamics of when and how many resources will be needed.

FPC should be considered part of a scaling strategy – but as one of many parts.

Read part 2 where we discuss other Magento caches.

Read the overview of our Magento scaling series here.