Choosing a Hosting Platform for Magento: Digital Ocean

Once in a while you may want to review the technology and options available for Magento hosting: technologies change, hosting costs fall, and life becomes easier for you and the merchant. In this multi-part series we will explore cloud platforms and their suitability for hosting a production Magento website.

In this article we will explore how seriously you should consider Digital Ocean as a platform for hosting.

Digital Ocean (DO) offers VMs it calls “droplets”. With an excellent blog and a friendly attitude towards developers, DO is a serious hosting contender, and many developers naturally recommend it to merchants for hosting Magento. How well does that recommendation hold up? Let me explore a few pros and cons.

DO Choice of vCPU and memory

DO offers “shared CPU” and “dedicated CPU” droplets. For eCommerce hosting we always prefer dedicated CPU. Shared CPUs work differently on every cloud provider, and quite often you do not get enough CPU time just when you need it most. Each vCPU is an Intel hyper-thread. As of this writing (July 2020), we see Intel Xeon Gold 6140 processors @ 2.30 GHz with DDR4 memory at 2.6 GHz. Our simple memory speed test showed about 1.8 GHz effective throughput to memory.
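To see which CPU model and how many vCPUs a droplet actually exposes, you can read /proc/cpuinfo. The following is a minimal sketch, assuming a Linux droplet on x86 hardware (where /proc/cpuinfo contains "model name" lines); the function name cpu_summary is illustrative and not part of any tool.

```shell
# Sketch: summarise the CPU a Linux VM exposes.
# cpu_summary is a hypothetical helper name. It reads /proc/cpuinfo by default
# and accepts another file in the same format as its first argument.
cpu_summary() {
    local src="${1:-/proc/cpuinfo}"
    awk -F': *' '
        /^model name/ { model = $2; n++ }   # one "model name" line per logical CPU
        END { printf "%d x %s\n", n, model }
    ' "$src"
}
```

Running cpu_summary with no arguments on the droplet prints the number of logical CPUs followed by the model string reported by the hypervisor.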

DO disk: a love/hate relationship!

I love the disk speed: you get good SSD performance with no throttling, even for attached storage. Unlike on other cloud platforms, you do not have to work out how many IOPS to provision, or first learn how IOPS translate to throughput on that platform.

To test disk speed I use a simple but effective method: I use dd to write a 1 GB file from /dev/zero, and dd reports the write speed. Here is the command I use:

dd if=/dev/zero of=$tempFile bs=1M count=1024 conv=sync oflag=direct

Here tempFile holds the path to a temporary file on the mounted disk I am testing.
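The command can be wrapped so that the temporary file is always created on the right mount and removed afterwards. This is a minimal sketch, assuming GNU dd; the name disk_write_test, its arguments, and the buffered fallback (for filesystems that reject direct I/O) are additions for illustration, not part of the method described above.

```shell
# Sketch: write-throughput test for one mount point (assumes GNU dd).
# disk_write_test and its arguments are hypothetical names.
disk_write_test() {
    local dir="${1:?usage: disk_write_test <dir> [size_mb]}"
    local size_mb="${2:-1024}"
    local tempFile out status=0
    tempFile="$(mktemp "$dir/ddtest.XXXXXX")" || return 1

    # Same command as in the article; oflag=direct bypasses the page cache.
    if ! out="$(dd if=/dev/zero of="$tempFile" bs=1M count="$size_mb" conv=sync oflag=direct 2>&1)"; then
        # Assumption: if direct I/O is not supported here, fall back to a
        # buffered write that is flushed to disk before dd reports its timing.
        out="$(dd if=/dev/zero of="$tempFile" bs=1M count="$size_mb" conv=fdatasync 2>&1)" || status=1
    fi
    rm -f "$tempFile"
    # dd prints its throughput summary as the last line of its output.
    printf '%s\n' "$out" | tail -n 1
    return "$status"
}
```

For example, disk_write_test /mnt/volume1 would test a block device mounted at /mnt/volume1 (a placeholder path). Running it against both the root disk and the attached volume gives directly comparable figures.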

For both the droplet's local disk and mounted block storage we see disk speeds of 200-350 MB/s across all the droplets our customers use. This is the best we have seen on cloud platforms. Physical hardware can give up to 450 MB/s.

So, why the hate?

We have seen disk crashes, thankfully only on staging servers. So, when it comes to production servers, we always recommend that customers have a disaster recovery (DR) plan to minimize data loss when this happens. Our suspicion is that the storage is not in a managed RAID, hence a disk crash is a droplet-specific event.

Network

File transfer speed test

A large file transfer using scp on the internal network runs at about 130 MB/sec, which is roughly 1 Gb/sec.
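The conversion from megabytes per second to gigabits per second is simple arithmetic, but it is easy to mix up the two units when comparing disk and network figures. A small sketch, assuming decimal units (1 Gb = 1000 Mb); the function name mbytes_to_gbits is illustrative:

```shell
# Convert a throughput in megabytes per second to gigabits per second
# (decimal units: 1 byte = 8 bits, 1 Gb = 1000 Mb).
# mbytes_to_gbits is a hypothetical helper name.
mbytes_to_gbits() {
    awk -v mb="$1" 'BEGIN { printf "%.2f\n", mb * 8 / 1000 }'
}

mbytes_to_gbits 130   # prints 1.04
```

So a 130 MB/sec scp transfer is effectively saturating a 1 Gb/sec link.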

NFS

NFS on block storage performs poorly. We use nfscache so that most reads are served from cache. However, when a workload needs a large number of reads or writes, performance can drop dramatically.

Examples of NFS hurting performance

Magento 1: As images are generated on the frontend, Magento checks whether an image file exists before including its URL in a page. Over NFS each of these checks adds latency, which invariably results in a slowdown.

Magento 1 and 2: large log files. Magento stores log files in a shared folder, so when each hit writes multiple log lines, those writes over NFS slow the site down.

Experience: We were in the process of taking a Magento 2 website live while about 2500 errors were being written to a log file. The issue was related to the M1 migration, which left some attributes undefined in M2. App servers saw 30% CPU in I/O wait and the site slowed to a crawl. PHP access logs showed under 10% CPU utilization.

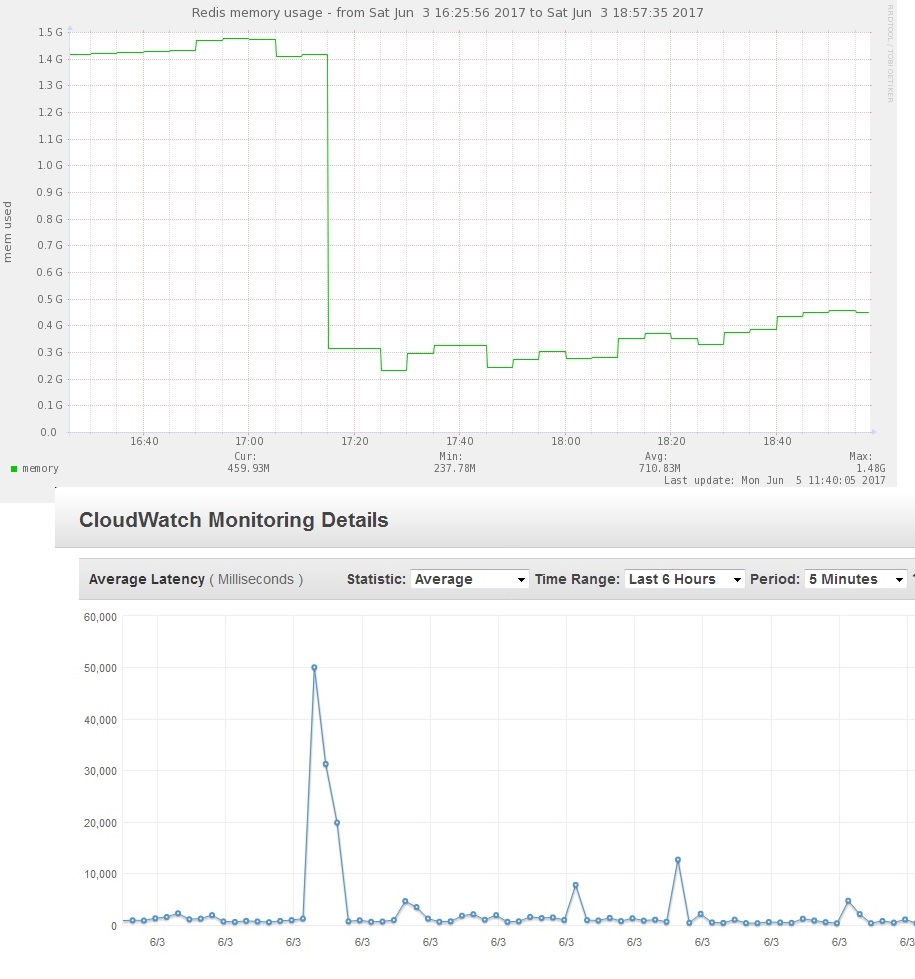
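One way to confirm that a slowdown like this is I/O-bound rather than CPU-bound is to sample the aggregate cpu line in /proc/stat twice and compute the share of time spent in iowait. The following is a minimal sketch, assuming Linux; the function names are illustrative. Tools such as top and vmstat report the same figure as "wa".

```shell
# Percentage of CPU time spent in I/O wait between two samples of the
# aggregate "cpu" line from /proc/stat (Linux only).
# Fields after the label: user nice system idle iowait irq softirq steal ...
# iowait_pct and sample_iowait are hypothetical helper names.
iowait_pct() {
    printf '%s\n%s\n' "$1" "$2" | awk '
        {
            total = 0
            # Sum user..steal (fields 2-9); guest time is already part of user.
            for (i = 2; i <= 9 && i <= NF; i++) total += $i
            t[NR] = total
            w[NR] = $6          # iowait
        }
        END {
            d = t[2] - t[1]
            if (d <= 0) print "0.0"
            else printf "%.1f\n", 100 * (w[2] - w[1]) / d
        }'
}

# Take two samples N seconds apart (default 5) and print the iowait share.
sample_iowait() {
    local before after
    before="$(head -n 1 /proc/stat)"
    sleep "${1:-5}"
    after="$(head -n 1 /proc/stat)"
    iowait_pct "$before" "$after"
}
```

Calling sample_iowait 10 on an app server during the slowdown would print a value near 30 in a situation like the one described above, while user CPU stays low.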

Non-availability of autoscale

DO does not come with an autoscale option (autoscaling is available in their Kubernetes offering, which we are not considering here). This means you may have to keep spare capacity provisioned for peaks such as a holiday sale.

Managing server costs

Server costs even when you shut them down

DO charges for servers even while they are shut down. If you do not want to be charged fully for a server, one option is to store an image of it instead.
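With DO's command-line client, doctl, this could look roughly like the sketch below: shut the droplet down, snapshot it, and destroy the droplet only once the snapshot has succeeded. It assumes doctl is installed and authenticated; park_droplet, the droplet ID, and the snapshot name are placeholders. Snapshot storage is itself billed, and destroying a droplet releases its IP address, so treat this as an outline to adapt rather than a recipe.

```shell
# Sketch: park a droplet as a snapshot so it stops being billed as a droplet.
# Assumes doctl is installed and authenticated. park_droplet, the droplet ID
# and the snapshot name are hypothetical, not values from the article.
park_droplet() {
    local droplet_id="${1:?usage: park_droplet <droplet-id> <snapshot-name>}"
    local snapshot_name="${2:?usage: park_droplet <droplet-id> <snapshot-name>}"

    # Shut down cleanly first so the snapshot captures a consistent disk.
    doctl compute droplet-action shutdown "$droplet_id" --wait || return 1
    # Take the snapshot and wait for it to finish.
    doctl compute droplet-action snapshot "$droplet_id" \
        --snapshot-name "$snapshot_name" --wait || return 1
    # Destroy the droplet only after the snapshot succeeded.
    doctl compute droplet delete "$droplet_id" --force
}
```

A call such as park_droplet 12345 staging-before-shutdown (both values made up) leaves only the snapshot behind, from which a new droplet can be created later.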

Recommendation

We host customers on Digital Ocean if they do not need scaling and are willing to maintain a DR plan. The DR plan generally increases the cost of the overall solution.

DO also suits stores that are unlikely to need scaling, such as:

- PWA: Vue Storefront based stores, which use Node.js

- A Magento store with a high hit ratio Varnish FPC (this depends on many factors, but essentially the need to scale across multiple app servers is less likely)